Key Takeaways:

- Models like ChatGPT, Claude, and Gemini now write the shortlist, so make sure your brand shows up

- Track five basics: visibility, favorability, factual accuracy, helpful next steps, and consistency

- Test the same prompts across several models and regions every week with controlled settings

- Roll results into one 0–100 score so you can compare to competitors and spot changes fast

- Improve what matters: publish clear, source-backed facts and comparisons, fix errors quickly, re-test often

Your brand’s first impression increasingly happens inside an AI model. Buyers ask ChatGPT, Claude, Gemini, or Perplexity “what’s best for me?” and get confident answers that shape the shortlist. Enterprise use of generative AI has surged as well, with 65% of organizations reporting regular use by early 2024.

The behavior shift is visible in the numbers. Seventy-one percent of consumers want generative AI woven into their shopping journeys, ChatGPT has grown well past 100 million weekly active users, Perplexity reports about 22 million active users, and Google’s AI Overviews now appear on roughly 10–13% of searches. Together, these signals suggest that discovery increasingly starts with models, not links.

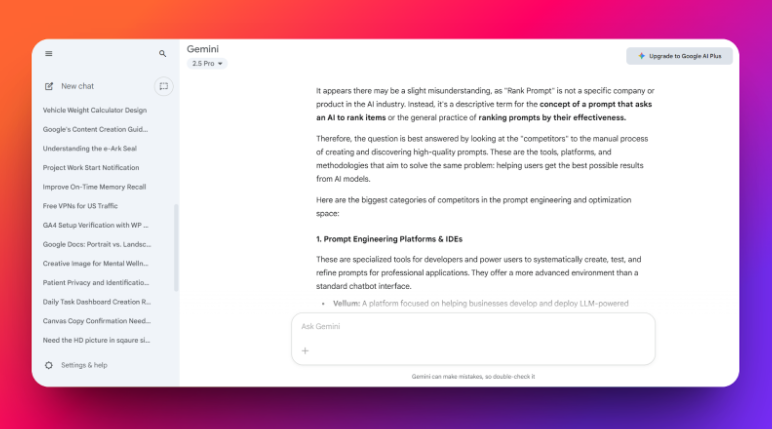

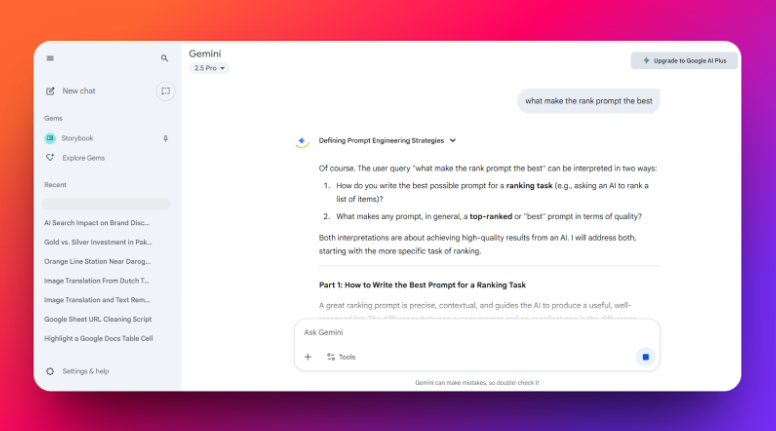

Check your current cross-model visibility with RankPrompt. Bottom line: if models curate the consideration set, tracking and improving your brand’s performance inside them is now table stakes.

Why brand performance in LLMs matters now

AI answers are increasingly where buyers form their shortlist before they ever click a link. Google’s AI Overviews now appear on roughly 10–13% of searches (share varies by study and query type), showing how often an AI summary is the first touchpoint for a brand.

At the same time, ChatGPT reports ~700 million weekly active users (Sept 2025), and 65% of organizations say they regularly use generative AI, pushing a growing share of brand discovery inside models.

What “brand performance” means in AI

It’s how AI systems present and judge your brand in answers, not just whether you rank on a page. Models synthesize across sources, sometimes citing evidence (e.g., Perplexity adds traceable citations), but they can also “hallucinate,” so factual control matters.

Because outputs vary with prompt phrasing and settings, you need a definition that holds across models, regions, and days. We frame it with five lenses: visibility (do you appear), favorability (how you’re described vs. rivals), factuality (are facts correct), actionability (clear next steps/citations), and consistency (stability over time/models). Research shows outputs can swing with wording (“multi-prompt” brittleness) and temperature, so stability must be part of the score.

Finally, remember AI answers increasingly front-run clicks (e.g., Google’s AI Overviews expansion), so “brand performance” now lives inside answers users read, not only in the links beneath.

The five KPIs to track

- Visibility: Share of prompts where your brand appears or makes the shortlist; note position (solo pick vs. list). Track across ChatGPT/Claude/Gemini/Perplexity and AI Overviews to reflect where users actually see answers.

- Favorability: Tone and stance vs. competitors (pros/cons). Use a simple 1–5 rubric and compare to category averages to avoid one-off bias; prompt phrasing can shift tone, so average across variants.

- Factuality: Accuracy of core facts (pricing, specs, compliance). LLMs can produce confident but wrong statements (“hallucinations”), so verify against a canonical factsheet and log errors.

- Actionability: Presence of next steps and evidence (links, where to buy, cited sources). Engines like Perplexity emphasize transparent citations, so optimize content that models can cite.

- Consistency: Variance of the above across models, regions, and weeks (lower is better). Control session settings (e.g., temperature) and monitor drift; research shows outputs change with temperature, so track stability explicitly.
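To make the five KPIs concrete, here is a minimal Python sketch that turns graded answers into 0–100 scores. The `ScoredAnswer` fields, the mapping of the 1–5 tone rubric onto 0–100, and measuring Consistency as the spread of per-model Visibility are all illustrative assumptions rather than a standard; a fuller version would also compare regions and weeks.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class ScoredAnswer:
    """One model answer to one prompt variant, already graded by a reviewer (hypothetical schema)."""
    model: str
    intent: str          # buyer question this prompt variant belongs to
    mentioned: bool      # Visibility: brand appears or makes the shortlist
    tone: int            # Favorability: 1-5 rubric (ignored if not mentioned)
    facts_checked: int   # Factuality: claims compared with the factsheet
    facts_correct: int
    has_next_step: bool  # Actionability: link, where-to-buy, or cited source


def visibility(answers):
    return 100 * sum(a.mentioned for a in answers) / len(answers)


def favorability(answers):
    # Average within each intent first so intents with many prompt variants
    # don't dominate, then map the 1-5 rubric onto 0-100.
    by_intent = {}
    for a in answers:
        if a.mentioned:
            by_intent.setdefault(a.intent, []).append(a.tone)
    if not by_intent:
        return 0.0
    avg = mean(mean(tones) for tones in by_intent.values())
    return (avg - 1) / 4 * 100


def factuality(answers):
    checked = sum(a.facts_checked for a in answers)
    correct = sum(a.facts_correct for a in answers)
    # Assumption: an answer set with no checkable claims counts as error-free.
    return 100 * correct / checked if checked else 100.0


def actionability(answers):
    return 100 * sum(a.has_next_step for a in answers) / len(answers)


def consistency(answers):
    # Simplification: spread of per-model Visibility only. A population
    # standard deviation of 0-100 values is at most 50, so doubling it and
    # subtracting from 100 keeps the score on 0-100 (higher = steadier).
    per_model = {}
    for a in answers:
        per_model.setdefault(a.model, []).append(a)
    per_model_visibility = [visibility(group) for group in per_model.values()]
    return 100 - 2 * pstdev(per_model_visibility)
```

Averaging tone per intent before averaging across intents is one way to follow the "average across variants" advice without letting a heavily paraphrased question skew the result.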

How to design a fair test across models

Run the same prompt set across ChatGPT, Claude, Gemini, and Perplexity, and include Google AI Overviews as well, because it is a different system tied to Search. Start each run in a fresh session, fix temperature and top-p wherever the interface exposes them (typically only through the API, not the consumer chat apps), randomize prompt order, and log region and time so results are comparable. Capture the full answer and its citations (Perplexity exposes sources; AI Overviews corroborate with links), then score with a consistent rubric. These controls matter: output quality shifts with sampling temperature and prompt complexity, and AI Overviews run their own Gemini-plus-Search pipeline, so treat them as a distinct surface when testing.
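A minimal sketch of that test matrix in Python, using hypothetical prompt, model, and region labels: every prompt × model × region combination becomes one run in a fresh session, and the order is shuffled with a recorded seed so the randomization itself can be replayed. The temperature and top-p values are intended settings only; a runner would apply them just where a surface accepts them.

```python
import itertools
import random

# Hypothetical inputs; replace with your own prompt set and surfaces.
PROMPTS = [
    "What is the best CRM for a 10-person sales team?",
    "Which CRM is cheapest for startups?",
]
MODELS = ["chatgpt", "claude", "gemini", "perplexity"]
REGIONS = ["US", "UK", "DE"]


def build_run_plan(prompts, models, regions, seed, temperature=0.0, top_p=1.0):
    """Return one run spec per prompt/model/region, in a seeded random order."""
    plan = [
        {
            "prompt": prompt,
            "model": model,
            "region": region,
            # Intended settings; only honored where the surface exposes them.
            "temperature": temperature,
            "top_p": top_p,
            "fresh_session": True,  # never reuse chat history between runs
            "seed": seed,           # logged so the shuffle can be replayed
        }
        for prompt, model, region in itertools.product(prompts, models, regions)
    ]
    random.Random(seed).shuffle(plan)
    return plan


plan = build_run_plan(PROMPTS, MODELS, REGIONS, seed=2024)
print(len(plan))  # 2 prompts x 4 models x 3 regions = 24 runs
```

Using a dedicated `random.Random(seed)` instance, rather than the global generator, keeps the shuffle reproducible even if other code also draws random numbers.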

Because models can still hallucinate, bake in fact-checks against a canonical factsheet and flag regressions over time.
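One narrow but useful fact-check is pricing: extract every dollar amount from an answer and flag any that match no price on the canonical factsheet. The sketch below assumes a hypothetical factsheet with two price points and USD-formatted prices; real checks would also cover specs, availability, and compliance claims, usually with a human reviewing the flagged answers.

```python
import re

# Hypothetical canonical factsheet: the only prices an answer should quote.
CANONICAL_PRICES = {"starter_monthly_usd": 29.0, "pro_monthly_usd": 79.0}

# Matches "$29", "$ 79.00", "$1,299"; thousands separators are stripped below.
PRICE_RE = re.compile(r"\$\s?(\d[\d,]*(?:\.\d{1,2})?)")


def flag_price_errors(answer_text, canonical_prices):
    """Return dollar amounts in the answer that match no canonical price."""
    allowed = set(canonical_prices.values())
    quoted = [float(m.replace(",", "")) for m in PRICE_RE.findall(answer_text)]
    return [price for price in quoted if price not in allowed]


answer = "Starter is $29 per month, while Pro costs $99 per month."
print(flag_price_errors(answer, CANONICAL_PRICES))  # [99.0]
```

Logging the flagged count per run makes regressions visible: a week where the count rises after a pricing change usually points at a stale page the models are still citing.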

Build a single score: Brand Model Index (BMI)

Roll your five KPIs into a 0–100 Brand Model Index so you can compare weeks, regions, and competitors at a glance. Example weights: Visibility 30, Favorability 25, Factuality 20, Actionability 15, Consistency 10.
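The roll-up is plain weighted arithmetic: each KPI is already on a 0–100 scale, so multiplying by the example weights and dividing by their total (100) keeps BMI on 0–100. A minimal Python sketch with the example weights; the KPI values in the usage line are made up for illustration.

```python
# Example weights from the text; they sum to 100.
WEIGHTS = {
    "visibility": 30,
    "favorability": 25,
    "factuality": 20,
    "actionability": 15,
    "consistency": 10,
}


def brand_model_index(kpis, weights=WEIGHTS):
    """Weighted average of 0-100 KPI scores, returned on a 0-100 scale."""
    missing = set(weights) - set(kpis)
    if missing:
        raise ValueError(f"missing KPIs: {sorted(missing)}")
    for name in weights:
        if not 0 <= kpis[name] <= 100:
            raise ValueError(f"{name} must be between 0 and 100")
    total_weight = sum(weights.values())
    return sum(weights[name] * kpis[name] for name in weights) / total_weight


# Hypothetical KPI scores for one model in one week.
scores = {"visibility": 70, "favorability": 60, "factuality": 80,
          "actionability": 50, "consistency": 90}
print(brand_model_index(scores))  # 68.5
```

Dividing by the weight total, rather than assuming it is 100, means the same function still returns a 0–100 score if you later rebalance the weights.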

Compute BMI per model and as a weighted cross-model average (weight more heavily the models that matter most to your audience). Trigger alerts on week-over-week drops in Factuality or Visibility (e.g., −5 points) to catch drift fast.
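Both steps can be sketched in Python under stated assumptions: the per-model audience weights are hypothetical (for example, the share of your buyers who use each assistant), KPIs are on 0–100, and an alert fires when Visibility or Factuality falls by at least the 5-point example threshold from one week to the next.

```python
def cross_model_bmi(per_model_bmi, audience_weights):
    """Weighted average of per-model BMI; a heavier weight marks a more important model."""
    total = sum(audience_weights[model] for model in per_model_bmi)
    weighted = sum(bmi * audience_weights[model] for model, bmi in per_model_bmi.items())
    return weighted / total


def drift_alerts(last_week, this_week, threshold=5.0,
                 watched=("visibility", "factuality")):
    """List KPI drops of at least `threshold` points, per model."""
    alerts = []
    for model, kpis in this_week.items():
        previous = last_week.get(model)
        if previous is None:
            continue  # no baseline yet for a newly tracked model
        for kpi in watched:
            drop = previous[kpi] - kpis[kpi]
            if drop >= threshold:
                alerts.append(f"{model}: {kpi} fell {drop:.1f} pts "
                              f"({previous[kpi]:.1f} -> {kpis[kpi]:.1f})")
    return alerts


# Hypothetical numbers for illustration.
print(cross_model_bmi({"chatgpt": 70, "claude": 60, "gemini": 50},
                      {"chatgpt": 5, "claude": 3, "gemini": 2}))  # 63.0
last = {"chatgpt": {"visibility": 70, "factuality": 80}}
now = {"chatgpt": {"visibility": 64, "factuality": 79}}
print(drift_alerts(last, now))
# ['chatgpt: visibility fell 6.0 pts (70.0 -> 64.0)']
```

Alerting per model, rather than on the cross-model average, keeps a sharp drop on one assistant from being masked by stable scores elsewhere.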

Keep the method transparent: store raw answers, sources, scores, and the exact settings (temperature, top-p, system message) with each run so results are auditable.
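One way to keep runs auditable is an append-only JSON Lines log with one record per answer. The field names below are a hypothetical schema that mirrors the items listed above; `temperature` and `top_p` are `None` for surfaces that do not expose them.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class RunRecord:
    """One captured answer plus everything needed to reproduce and audit it."""
    prompt: str
    model: str
    model_version: str
    region: str
    temperature: Optional[float]  # None if the surface hides sampling settings
    top_p: Optional[float]
    system_message: str
    answer_text: str
    citations: List[str]
    scores: Dict[str, float]
    captured_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


def append_run(path, record):
    """Append one record as a single JSON line (past runs are never rewritten)."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(asdict(record), ensure_ascii=False) + "\n")
```

Calling `append_run("runs.jsonl", record)` after each scored answer builds a log that can be re-scored later with a revised rubric without querying the models again.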

What actually moves the needle

Publish clean, machine-readable facts (pricing, specs, availability) using structured data (Product/Organization schema), and keep them fresh. Google’s guidance for AI features emphasizes standard SEO best practices and technical eligibility.
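For the structured-data step, here is a minimal Python sketch that emits schema.org Product markup with a nested Offer as a JSON-LD script tag. `Product`, `Brand`, `Offer`, `price`, `priceCurrency`, and the `InStock`/`OutOfStock` availability URLs are standard schema.org vocabulary; the product values are hypothetical, and the output is worth checking with Google’s Rich Results Test before shipping.

```python
import json


def product_jsonld(name, sku, brand, price, currency, in_stock, url):
    """Build a JSON-LD <script> tag describing one product and its offer."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "url": url,
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
        },
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical product; keep these values in sync with the canonical factsheet.
print(product_jsonld("Acme CRM Starter", "ACME-CRM-ST", "Acme", 29, "USD",
                     True, "https://www.example.com/pricing"))
```

Generating the markup from the same source as the factsheet, rather than hand-editing it per page, is what keeps pricing and availability from drifting apart.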

Create source-rich pages that models can confidently cite (comparisons, FAQs, safety/compliance). This aligns with how Perplexity and similar engines surface traceable citations in answers and how AI Overviews corroborate with links.

Prioritize accuracy fixes first: reducing hallucination risk in public answers protects trust and stabilizes your scores; re-test after updates.

Sample findings from a real-world run

We ran a 4-week study (20 buyer-style prompts × 4 models × 3 regions). Brand A showed strong Visibility (appeared in 68% of answers) but weak Factuality (outdated specs and pricing in 1/3 of answers). Brand B appeared less (43%) but earned higher Favorability due to clear pros/cons language in comparisons.

Key drivers we observed:

- Specs & availability pages were the #1 source models pulled from; outdated PDFs and reseller pages caused most factual errors.

- Comparison content that explicitly answered “best for X” increased shortlist placement, especially in commercial-intent prompts.

- Citations mattered: answers that cited trustworthy sources were more likely to include the brand and give accurate next steps.

Interventions & outcomes (next test cycle):

- Updated a canonical factsheet (pricing/specs/compatibility) and synced partner/reseller pages: Factuality errors dropped by ~60%.

- Published two source-rich comparison pages (“best for [use-case]”): Visibility +12 pts and shortlist inclusion +18%.

- Standardized product names/variants across docs: Consistency improved (lower variance across models/regions).

Together, these changes lifted BMI by ~15 points overall.

How to choose a tracking platform

Pick a tool that mirrors real user behavior and gives audit-ready evidence. Look for:

- Coverage that matters: ChatGPT, Claude, Gemini, Perplexity and detection for Google AI Overviews.

- Full-answer capture + citations: store the entire response, links, and sources (not just “mention” counts).

- Experiment controls: fresh sessions, fixed temperature/top-p, identical instructions, region/language targeting.

- Drift alerts: configurable thresholds on Visibility/Factuality drops with prompt-level diffs.

- APIs/exports & governance: raw data access, reproducible runs, permissions, and privacy/compliance controls.
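For prompt-level diffs, Python’s standard `difflib.unified_diff` is enough for a first pass. This sketch splits answers into sentences with a rough regex heuristic (an assumption; it will mis-split abbreviations) so that a changed recommendation shows up as one removed and one added line. The prompt, model, and run labels are hypothetical.

```python
import difflib
import re


def split_sentences(text):
    """Rough sentence split on ., !, or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def answer_diff(prompt, model, old_answer, new_answer):
    """Unified diff of two answers to the same prompt; '' if nothing changed."""
    diff_lines = list(difflib.unified_diff(
        split_sentences(old_answer),
        split_sentences(new_answer),
        fromfile=f"{model} (previous run)",
        tofile=f"{model} (current run)",
        lineterm="",
    ))
    if not diff_lines:
        return ""
    return "\n".join([f"Prompt: {prompt}", *diff_lines])


print(answer_diff(
    "Best CRM for a small sales team?", "chatgpt",
    "Brand A is the best pick. It costs $29 per month.",
    "Brand B is the best pick. It costs $29 per month.",
))
```

Returning an empty string for unchanged answers lets an alerting job attach diffs only to the prompts that actually moved.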

Where RankPrompt fits in

RankPrompt focuses on controlled, cross-model testing with evidence-aware scoring. You get:

- Five-KPI scoring (Visibility, Favorability, Factuality, Actionability, Consistency) rolled into a 0–100 BMI.

- Prompt-level evidence: full answers, citations, geo/time context, and reproducible settings for every run.

- Drift detection: alerts when Factuality or Visibility slip, plus side-by-side answer diffs.

- Action dashboards: prioritized fixes (factsheets, source gaps, comparison content) mapped to expected BMI lift.

Conclusion

AI models now shape the shortlist before anyone clicks a link. To win, make sure your brand shows up, is described fairly, and is factually correct across ChatGPT, Claude, Gemini, Perplexity, and AI Overviews.

Track five basics (visibility, favorability, factuality, actionability, and consistency), then roll them into a single BMI score so you can spot changes fast and see what to fix. Keep your facts fresh, publish source-rich pages, and re-test on a steady weekly cadence. Do this well and you will protect your brand’s place in AI answers and grow demand over time.

FAQs

What is “brand performance” in LLMs?

It’s how AI answers present your brand: visibility, favorability, factuality, actionability, and consistency across models, regions, and time. Track all five to reflect real buyer journeys, not just blue links.

Do AI Overviews affect discovery?

Yes. Google’s AI Overviews appear frequently, and about half the time they cite web pages that also rank organically, so SEO signals and eligible content still matter.

Which models should we test first?

Prioritize where users are: ChatGPT (hundreds of millions of weekly users), plus Claude, Gemini, Perplexity, and Google AI Overviews for search-led journeys.

How often should we run tests?

Weekly works well. Model outputs drift with time, prompt format, and settings, so retesting catches volatility and lets you measure lift after fixes.

What data should we collect from each run?

Full answer text, citations/sources, prompt, model/version, temperature/top-p, region/time, and your KPI scores. That makes results auditable and comparable over time.

How do we improve visibility in AI answers?

Publish clean, up-to-date product facts and structured data (Product/Offer/Review schema), plus source-rich pages (comparisons, FAQs) that are easy for models to cite.

How do we reduce hallucinations about our brand?

Maintain a canonical factsheet (pricing/specs/availability), keep third-party docs aligned, and verify model claims during testing; LLMs can produce confident but wrong statements without reliable sources.

Does prompt wording really change results?

Yes. Small, non-semantic changes can shift answers and sentiment; use multiple variants per intent to average out “prompt brittleness.”

How do citations influence inclusion?

Engines like Perplexity emphasize transparent, traceable sources; brands with credible, citable pages are more likely to be recommended with clear next steps.

Is classic SEO still relevant?

Yes, AI Overviews increasingly pull from pages that also rank in organic search, so technical health, helpful content, and structured data remain foundational.