Key Takeaways:

- Focus on being cited in AI answers, not just ranking in blue links.

- Write answer-first pages: short summary up top, then steps, pros/cons, and a few natural FAQs.

- Make topics and names clear and consistent so AI knows who/what you mean.

- Add helpful schema (Article/HowTo/FAQ/Product) and keep pages fast, crawlable, and updated.

- Show evidence with sources and data, and refresh important pages regularly.

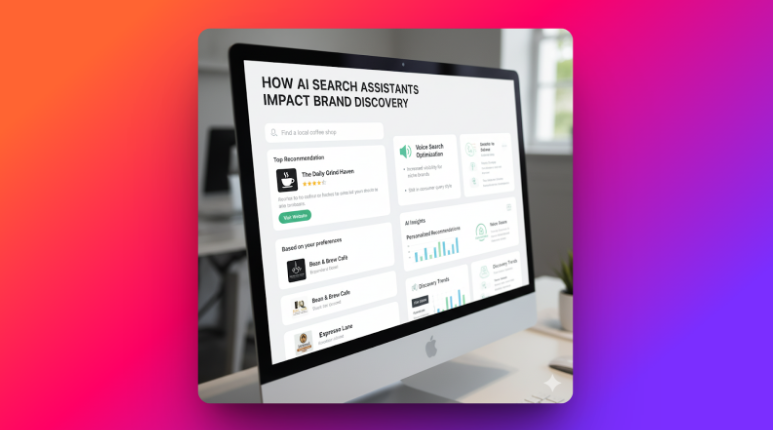

AI answers now sit where your clicks used to. Google’s AI Overviews and AI Mode, Microsoft Copilot Search, and engines like Perplexity summarize the web up top and cite sources, shifting SEO from “rank to click” to “be selected and cited.” Copilot grounds responses via Bing on the open web or custom-scoped sources, so clean information architecture and evidence-rich pages matter more than ever.

The numbers are real: Semrush finds AI Overviews in ~13% of U.S. desktop queries (March 2025) and rising; BrightEdge reports ~54% overlap between AIO citations and top organic results, so classic signals still count. Profound’s 30M-citation study shows each engine favors different sources, while industry reporting links AIO to click displacement for publishers. Bottom line: more summaries up top, mixed attribution, and measurable traffic impact.

This guide shows how to make your pages the ones these systems choose, cite, and trust so you keep earning visibility and conversions even when the answer happens before the click. Start with RankPrompt or explore the Platform.

What AI answers are

They’re short summaries that search tools generate at the top of results, built by large language models and grounded in web pages they find relevant. Google says its AI features follow the same core SEO principles; there’s no special markup to “opt in,” but clear, helpful content improves inclusion.

Microsoft explains that Copilot can ground answers on the open web through Bing (or custom-scoped sources) and then compose a response, so clean site structure and clear sections help your pages get used. Perplexity likewise highlights live web retrieval with inline citations.

Why this matters now

Independent tracking shows Google’s AI Overviews now appear in about 13% of U.S. desktop searches, mostly on informational queries, so more users see a synthesized answer before they scroll.

BrightEdge reports 54% of AIO citations now overlap with top organic rankings, meaning classic SEO signals still influence which sources get cited. At the same time, industry reports and publisher groups highlight traffic shifts and uneven accuracy, so being a clearly cited, trustworthy source is key.

How sources get picked

AI answers pull from pages that look relevant, clear, fresh, and trustworthy, then synthesize and cite them. Google says there’s no special “opt-in”: the same SEO basics apply, but content must directly answer the query and be easy to parse.

Microsoft describes Copilot as “grounding” on the open web (via Bing) or a custom-scoped search. That means clean information architecture, descriptive headings, and concise sections make your page easier to select and quote.

Studies also show AI Overviews increasingly cite pages that already rank well (now ~54% overlap), so classic relevance/authority signals still matter; they just need to be presented in an answer-friendly format.

Be cited, not just ranked

Treat citation share as a core KPI alongside traffic. If AI pulls a summary and names you as a source, you still earn visibility and assisted conversions even when fewer people click. BrightEdge’s overlap trend suggests improving organic quality also raises your citation odds.

Write copy that’s easy to lift: a tight intro that answers the question, short FAQs, tables/steps, and on-page sources. This matches how AI systems assemble summaries and footnotes in Overviews and Copilot.

Organize topics clearly

Build topic hubs with focused subpages, and keep names consistent for products, features, and concepts. This helps retrieval systems map your content to the right entity and query.

Link key entities to authoritative profiles (e.g., company to LinkedIn/Wikidata) and keep pages fresh. Engines and answer tools that use live web grounding or real-time retrieval favor clear, current pages with traceable sources.
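One common way to express the entity links described above is schema.org Organization markup with a sameAs list. The following is a minimal sketch, not a prescribed implementation: the helper name `organization_jsonld`, the company name, and every URL are hypothetical placeholders to be replaced with your real profiles.

```python
import json


def organization_jsonld(name: str, url: str, same_as: list[str]) -> str:
    """Build schema.org Organization JSON-LD whose sameAs lists external profiles."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,  # use the exact name that appears across your pages
        "url": url,
        "sameAs": same_as,
    }
    return json.dumps(data, indent=2)


# Hypothetical company and profile URLs, for illustration only.
org_markup = organization_jsonld(
    name="Example Co",
    url="https://www.example.com",
    same_as=[
        "https://www.linkedin.com/company/example-co",
        "https://www.wikidata.org/wiki/Q0000000",
    ],
)

# Wrap the JSON in the script tag that carries JSON-LD in a page's HTML.
print(f'<script type="application/ld+json">\n{org_markup}\n</script>')
```

Keeping the `name` value identical to the name used in headings and body copy supports the consistency this section recommends.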

Write answer-first pages

Open with a short, plain-English summary that answers the main question in 2–4 sentences. Then add clear sections, steps, pros/cons, a small table or checklist, and a few short FAQs in natural language. This makes your page easy for search systems to parse and quote at the top of results. Keep key facts near the top, and make headings descriptive so retrieval tools can match them to queries.

This layout also aligns with how engines build answers. Google says there’s no special “opt-in” for AI features; standard SEO best practices still apply, so clarity and helpfulness are your lever. Microsoft explains that Copilot can ground replies on the open web through Bing (or custom-scoped sources), which favors pages with clean structure and concise, verifiable sections. If your content is quick to scan and well organized, it’s more likely to be selected and cited.
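The 2–4 sentence opening can be checked mechanically before publishing. The sketch below is a rough lint under stated assumptions: the function names are hypothetical, the page text is assumed to start with the intro paragraph (not a heading), paragraphs are assumed to be separated by blank lines, and sentences are split naively on end punctuation, so abbreviations such as "e.g." will be miscounted.

```python
import re


def intro_sentence_count(page_text: str) -> int:
    """Count sentences in the first non-empty paragraph (naive punctuation split)."""
    paragraphs = [p.strip() for p in page_text.split("\n\n") if p.strip()]
    if not paragraphs:
        return 0
    # Split after ., ! or ? when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", paragraphs[0])
    return len([s for s in sentences if s])


def is_answer_first(page_text: str, low: int = 2, high: int = 4) -> bool:
    """True when the opening paragraph has between `low` and `high` sentences."""
    return low <= intro_sentence_count(page_text) <= high


# Hypothetical page text, for illustration only.
sample_page = (
    "AI answers are short summaries shown above search results. "
    "They cite the pages they draw from. "
    "Lead with the answer so your page is easy to quote.\n\n"
    "Steps\n\n"
    "First, write the summary."
)

print(intro_sentence_count(sample_page), is_answer_first(sample_page))
```

A check like this only measures length; whether the intro actually answers the main question still needs a human read.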

Use helpful schema

Add JSON-LD that truly fits the page: Article for blogs/news, HowTo for step-by-step guides, FAQPage for genuine Q&A blocks, and Product for product detail pages. Validate markup and keep it minimal. Google notes that rich features aren’t guaranteed even with schema, so focus on accurate fields and eligibility, not stuffing every type.
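As an illustration of minimal, page-fitting markup, here is a sketch that builds FAQPage JSON-LD from question and answer pairs that are already visible on the page. The helper name `faq_jsonld` and the sample pair are assumptions for illustration; the type and property names (FAQPage, mainEntity, Question, acceptedAnswer, Answer) come from the schema.org vocabulary.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # ensure_ascii=False keeps curly quotes and other non-ASCII text readable.
    return json.dumps(data, indent=2, ensure_ascii=False)


# Sample pair for illustration; use only Q&A that actually appears on the page.
faq_markup = faq_jsonld(
    [
        (
            "Do I need special markup to appear?",
            "No special markup is required. Focus on helpful content, "
            "good structure, and technical hygiene.",
        ),
    ]
)
print(faq_markup)
```

Generating the markup from the same source as the visible FAQ text helps keep the two in sync when pages are refreshed.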

Pair schema with solid technical basics so crawlers can read everything: fast loads, stable layout, and clean HTML. Google’s Core Web Vitals guidance (LCP/INP/CLS) is still a practical checklist; improving these can help your content be discovered and rendered correctly, which indirectly supports eligibility for AI-driven presentations.
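To turn the Core Web Vitals checklist into something you can run against your own measurements, the sketch below rates each metric using the thresholds Google publishes for LCP, INP, and CLS. The function name and the sample measurements are hypothetical; real values would come from your own field or lab data.

```python
# Published Core Web Vitals thresholds: (upper bound for "good", upper bound
# for "needs improvement"). Anything above the second value rates as "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}


def rate_vital(metric: str, value: float) -> str:
    """Rate one measurement as 'good', 'needs improvement', or 'poor'."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page, for illustration only.
page_vitals = {"LCP": 2.1, "INP": 340, "CLS": 0.31}
ratings = {metric: rate_vital(metric, value) for metric, value in page_vitals.items()}

for metric, rating in ratings.items():
    print(f"{metric}: {page_vitals[metric]} -> {rating}")
```

Running a check like this across your priority pages gives a quick list of which ones need performance work first.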

Prove it with sources

Back your claims with primary references, dates, and small data tables. Add a short “Sources” section so engines and users can verify key facts quickly. Tools like Perplexity are designed to surface live web citations by default, so being the clearest credible source on a topic increases the odds your link appears in the footnotes.

At the same time, audits show AI summaries can miss context or cite unevenly, which makes on-page evidence and clarity even more important. News and research roundups report accuracy and citation gaps across engines; your best defense is transparent sourcing, updated stats, and precise wording that’s hard to misinterpret when lifted into a summary.

Google AI Overviews tips

Lead with a short, direct answer in the first 2–4 sentences, then give steps, pros/cons, and a tiny FAQ. Use descriptive H2/H3s, concise paragraphs, and a small table or checklist where it helps. This makes your page easy to parse and cite. Keep schema right-sized (Article, HowTo, FAQPage) and your technical basics tight (fast load, stable layout, clean HTML). Google’s own guidance says there’s no special “opt-in”; solid SEO and helpful content are what qualify pages for AI features.

Refresh important pages (new data, dates, examples) and clarify entities (consistent names, definitions) so the system can connect your content to the query. Watch markets where AI Overviews are expanding and where overlap with organic results is rising. Being strong in classic SEO often increases the odds of being cited in AI Overviews.

Bing Copilot tips

Structure pages so Copilot can “ground” answers cleanly: clear headings, short sections that resolve a question, and verifiable snippets (definitions, numbered steps, tiny tables). Copilot relies on Bing web grounding (and can also use custom-scoped sources), so a tidy information architecture and up-to-date pages help your content surface.

Where you have repeatable info (comparisons, how-tos), standardize the pattern across pages. Keep claims sourced and recent, because grounded systems favor pages with obvious evidence and current facts. If you serve B2B audiences that use Microsoft 365, remember many tenants can toggle web grounding; when it’s on, live web content is pulled into answers, so recency and clarity really matter.

Track results and improve

Monitor a small set of priority queries weekly. Record where AI answers appear, whether you’re cited, and which paragraph or block likely got lifted. Pair this with organic rankings so you can see how overlap is changing; BrightEdge’s longitudinal data shows AIO citations now match top organic pages more than half the time, so “classic SEO + answer-first formatting” is a smart default.

Add two KPIs beyond traffic: citation share (how often you’re named in AI answers) and assisted conversions from those sessions. When you’re not cited, compare your page to those that are: look for a tighter intro, clearer headings, fresher stats, or better on-page sources. External studies on citation patterns (spanning ChatGPT, Google AIO, Perplexity) can show which types of pages tend to be cited; use that to prioritize rewrites.
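The weekly log and the citation-share KPI can be computed from a simple table of observations. The sketch below assumes one record per tracked query per week; the `Observation` fields, function names, and sample data are all hypothetical. The overlap figure here is a per-site simplification (the share of your own citations where you also rank in the top organic results), not the cross-source measure BrightEdge reports.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    query: str
    ai_answer_shown: bool  # an AI answer appeared for this query
    cited: bool            # your domain is among the AI answer's sources
    in_top_organic: bool   # your page also ranks in the top organic results


def citation_share(observations: list[Observation]) -> float:
    """Share of queries with an AI answer in which your domain is cited."""
    shown = [o for o in observations if o.ai_answer_shown]
    if not shown:
        return 0.0
    return sum(o.cited for o in shown) / len(shown)


def organic_overlap(observations: list[Observation]) -> float:
    """Share of your citations where you also rank in the top organic results."""
    cited = [o for o in observations if o.ai_answer_shown and o.cited]
    if not cited:
        return 0.0
    return sum(o.in_top_organic for o in cited) / len(cited)


# Hypothetical weekly log, for illustration only.
week = [
    Observation("what are ai answers", True, True, True),
    Observation("how to get cited by ai", True, True, False),
    Observation("faq schema example", True, False, True),
    Observation("brand pricing", False, False, True),
]

print(f"citation share: {citation_share(week):.0%}")
print(f"organic overlap: {organic_overlap(week):.0%}")
```

Tracking both numbers week over week shows whether rewrites are raising citations and whether those citations track your organic rankings.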

Wrap up

AI answers are the first thing many people see. If your pages give clear, short answers backed by sources, you can still win visibility even when clicks shrink.

Track your AI citations and fix gaps with Rank Prompt. Start with the Platform, review Use Cases, compare Pricing, and partner with us via Partners.

FAQs

What are “AI answers” in search?

Short, AI-generated summaries at the top of results (e.g., Google AI Overviews/AI Mode, Bing Copilot) that pull from web pages and add citations. Google says standard SEO best practices still apply; there’s no special “opt-in.”

How do these systems pick sources?

They ground responses in pages that are relevant, clear, current, and trustworthy; Copilot explicitly grounds via Bing (open web, specific URLs, or custom search). Clean structure and verifiable sections help selection.

Do I need special markup to appear?

No special markup is required. Focus on helpful content, good structure, and technical hygiene; schema can clarify meaning but doesn’t guarantee inclusion.

Which schema types help most?

Use what matches the page: Article (content), HowTo (steps), FAQPage (real Q&A), Product (details). Keep JSON-LD valid and minimal; rich results are never guaranteed.

Will AI answers cut my traffic?

On affected queries, many publishers report fewer clicks as answers satisfy intent up top; visibility shifts from “position” to “being cited.” Your hedge is to be the source that gets referenced.

How do I structure pages to be cited?

Lead with a 2–4 sentence answer, then steps, pros/cons, and a small table or checklist. Add brief FAQs in natural language. These formats are easy for engines to lift.

Do FAQs really help?

They make question-answer pairs explicit for retrieval and can be marked up with FAQPage when genuine. This improves machine understanding (though rich features aren’t guaranteed).

How do I measure success beyond rankings?

Track citation share in AI answers, brand mentions in summaries, and assisted conversions. Studies show platforms have distinct citation patterns, so optimize for how each one cites.

What’s different about Perplexity vs. Copilot?

Perplexity emphasizes real-time web retrieval with visible citations; Copilot grounds via Bing APIs and can scope to custom sources. Both reward clear, current, well-sourced pages.

Do speed and Core Web Vitals still matter?

Yes. Fast, stable pages help crawlers and users, reinforcing eligibility and understanding for AI features. Treat LCP/INP/CLS improvements as foundational maintenance.